Data Governance On Databricks

How Does It Work?

Imagine a world where the reins of your data are right in your hands, where every byte and bit dances to your tune, from who can access it to when and how it's used. This may seem like a far-fetched data utopia, but it is what data governance on Databricks strives to achieve.

Through a systematic and comprehensive approach, Databricks provides you with a command center from which you can orchestrate your entire data lifecycle. And this goes beyond just control. It's about transforming your raw data into a well-oiled machine that drives decision-making and delivers business value.

In this article, we will take you through the landscape of data governance on Databricks.

What Is Data Governance On Databricks?

Think of the data governance framework as your playbook for data management. It's not just a binder of do's and don'ts that collects dust in a drawer; it's your active strategy for making sure data is handled like a VIP at every stage. From ingestion to storage to analysis, each step has its own set of rules, ensuring consistency and security. It's a bit like having a recipe for a complex dish—you can't just toss ingredients in and hope for gourmet results.

In Databricks, this playbook is crucial because the platform is incredibly versatile. You've got engineers, data scientists, and business analysts all tapping into the same data sources. Without proper governance, it’s like a food fight in a kitchen—everyone’s touching everything, and no one knows what’s contaminated.

With Databricks, you can automate many of these governance policies. So it's less "bureaucratic red tape" and more "automated traffic signals" guiding data along the right paths.
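One way to picture this kind of automation is to keep access policy as data and render it into SQL `GRANT` statements. The sketch below is illustrative only: the policy entries, the group name `analysts`, and the helper names are assumptions, not something taken from a real workspace. It only builds strings; on Databricks each statement could then be run through a SQL editor or `spark.sql`.

```python
from typing import Iterable, List, Tuple

# (privilege, securable type, securable name, principal).
# Every name below is hypothetical and used only for illustration.
Grant = Tuple[str, str, str, str]

EXAMPLE_POLICY: List[Grant] = [
    ("USE CATALOG", "CATALOG", "main", "analysts"),
    ("USE SCHEMA", "SCHEMA", "main.sales", "analysts"),
    ("SELECT", "TABLE", "main.sales.orders", "analysts"),
]


def quote_principal(name: str) -> str:
    """Backtick-quote a principal name, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def render_grants(policy: Iterable[Grant]) -> List[str]:
    """Turn declarative policy entries into GRANT statements (strings only)."""
    return [
        f"GRANT {privilege} ON {kind} {securable} TO {quote_principal(principal)}"
        for privilege, kind, securable, principal in policy
    ]


if __name__ == "__main__":
    for statement in render_grants(EXAMPLE_POLICY):
        print(statement)
```

Keeping the policy in a reviewable list means access changes can go through the same review process as code, which is the "traffic signals" idea in practice.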

How Does Databricks Governance Work?

Here are some key features:

Role-Based Access Control (RBAC): Firstly, RBAC isn't a new concept, but Databricks does it with flair. You can granularly control who gets to see and do what, right down to notebook and data table levels. This isn't just a locked door; it's a complete security system.

Data Cataloging and Metadata Management: What good is a library if you don't know what books it holds? Databricks allows you to create an exhaustive, searchable catalog with rich metadata tagging for enterprise data. It's not just about finding data; it's about understanding its context.

Data Quality Checks: Look, garbage in, garbage out. Databricks lets you incorporate quality checks both during data ingestion and post-transformation. You can define rules and set alerts, effectively automating quality control.

Audit Trails and Compliance: Compliance isn't optional; it's mandatory. Databricks provides comprehensive logging and data access auditing capabilities. You can track data lineage, modifications, and even query histories. If someone did something sketchy, you'll know.

Monitoring and Alerts: Databricks gives you real-time monitoring options for system health, performance, and unauthorized activities. It's like having CCTV for your data. Alerts can be set up to notify you of anything unusual.
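To make the "define rules and set alerts" idea from the data quality checks concrete, here is a minimal sketch in plain Python. It does not use any Databricks API: the `Rule` class, the rule names, and the sample rows are all assumptions for illustration. On Databricks, similar rules would more likely be expressed with the platform's own mechanisms, such as table constraints or pipeline expectations.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List

Row = Dict[str, Any]


@dataclass(frozen=True)
class Rule:
    """A named quality rule; `check` returns True when a row passes."""
    name: str
    check: Callable[[Row], bool]


def count_failures(rows: Iterable[Row], rules: List[Rule]) -> Dict[str, int]:
    """Count how many rows fail each rule."""
    counts = {rule.name: 0 for rule in rules}
    for row in rows:
        for rule in rules:
            if not rule.check(row):
                counts[rule.name] += 1
    return counts


# Hypothetical rules for an orders feed.
RULES = [
    Rule("order_id_present", lambda r: r.get("order_id") is not None),
    Rule(
        "amount_non_negative",
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    ),
]

# Hypothetical sample data: one clean row, two rows with one problem each.
SAMPLE_ROWS = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": None, "amount": 5.0},
    {"order_id": 3, "amount": -2.0},
]

if __name__ == "__main__":
    failures = count_failures(SAMPLE_ROWS, RULES)
    for name, count in failures.items():
        if count > 0:
            # A real setup would raise an alert here instead of printing.
            print(f"ALERT: rule '{name}' failed for {count} row(s)")
```

The same function can run once at ingestion and again after transformation, so a rule that passes on raw data but fails downstream points at the transformation step.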

Data Governance With Databricks Unity Catalog

- Unity Catalog is Databricks' powerful solution for data governance programs. Designed to manage, secure, and control data and AI assets across any cloud, it's a game-changer for organizations handling extensive data on Databricks.

- Unity Catalog integrates features like centralized data access management, which allows for defining access policies at the account level. This shift lifts the responsibility of governance from each workspace, facilitating communication among them and aligning with the Data Mesh architecture of large organizations.

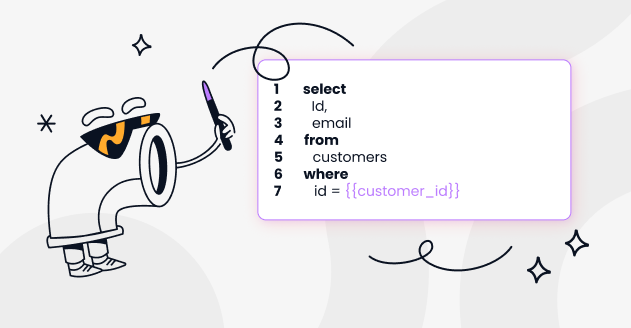

- Moreover, Unity Catalog supports fine-grained access control. You can attach row filters and column masks to tables, both defined as standard SQL functions, so that what a user sees depends on who is asking. This gives precise control over data accessibility.

- Unity Catalog also enhances asset discoverability. It presents a data explorer view, enabling data users to swiftly find relevant data assets, with search results tailored to their access controls.

- An impressive feature of Unity Catalog is its automated lineage of workloads. It provides comprehensive visibility of data flow in the lakehouse, with access-control-aware lineage graphs. This data lineage can be visualized through the UI or integrated with other catalogs via API calls.

- Additionally, Unity Catalog serves as a Delta Sharing server, facilitating secure, scalable data sharing without data replication. This efficient data-sharing alternative makes Unity Catalog a compelling solution for data governance in Databricks.
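The fine-grained access control described above, row-level filtering and column masking through SQL functions, can be sketched as follows. The Python below only assembles the SQL text. The table, column, function, and group names are hypothetical, and the inputs are assumed to be trusted identifiers (no escaping is done), so treat it as an outline rather than a hardened implementation.

```python
from typing import List


def row_filter_sql(
    table: str, function: str, column: str, allowed_value: str, admin_group: str
) -> List[str]:
    """SQL for a row filter: admins see all rows, others only `allowed_value`."""
    return [
        f"CREATE OR REPLACE FUNCTION {function}({column} STRING) "
        f"RETURN IF(is_account_group_member('{admin_group}'), true, "
        f"{column} = '{allowed_value}')",
        f"ALTER TABLE {table} SET ROW FILTER {function} ON ({column})",
    ]


def column_mask_sql(
    table: str, function: str, column: str, cleared_group: str, redacted: str
) -> List[str]:
    """SQL for a column mask: cleared users see the value, others a placeholder."""
    return [
        f"CREATE OR REPLACE FUNCTION {function}({column} STRING) "
        f"RETURN CASE WHEN is_account_group_member('{cleared_group}') "
        f"THEN {column} ELSE '{redacted}' END",
        f"ALTER TABLE {table} ALTER COLUMN {column} SET MASK {function}",
    ]


if __name__ == "__main__":
    # All names here are made up for the example.
    statements = row_filter_sql(
        "main.sales.orders", "main.sales.us_only", "region", "US", "admins"
    ) + column_mask_sql(
        "main.hr.users", "main.hr.ssn_mask", "ssn", "hr_team", "***-**-****"
    )
    for statement in statements:
        print(statement + ";")
```

Because the filter and mask live on the table rather than in each query, every tool that reads the table gets the same restricted view.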

Wrapping Up

In conclusion, think of Databricks and data governance as the Lennon and McCartney of the data world—individually impressive, but unstoppable together. Databricks offers the horsepower, while governance provides the roadmap; it's a symbiotic relationship that propels your data strategy into overdrive.

What's your next move? If you haven't already, it's high time to schedule a sit-down with your team. Review your current governance policies and see how they can be turbocharged within the Databricks ecosystem.

About us

We write about all the processes involved when leveraging data assets: from the modern data stack to data teams composition, to data governance. Our blog covers the technical and the less technical aspects of creating tangible value from data.

At Castor, we are building a data documentation tool for the Notion, Figma, Slack generation or, data-wise, for the Fivetran, Looker, Snowflake, dbt aficionados. We designed our catalog software to be easy to use, delightful and friendly.

Want to check it out? Reach out to us and we will show you a demo.
